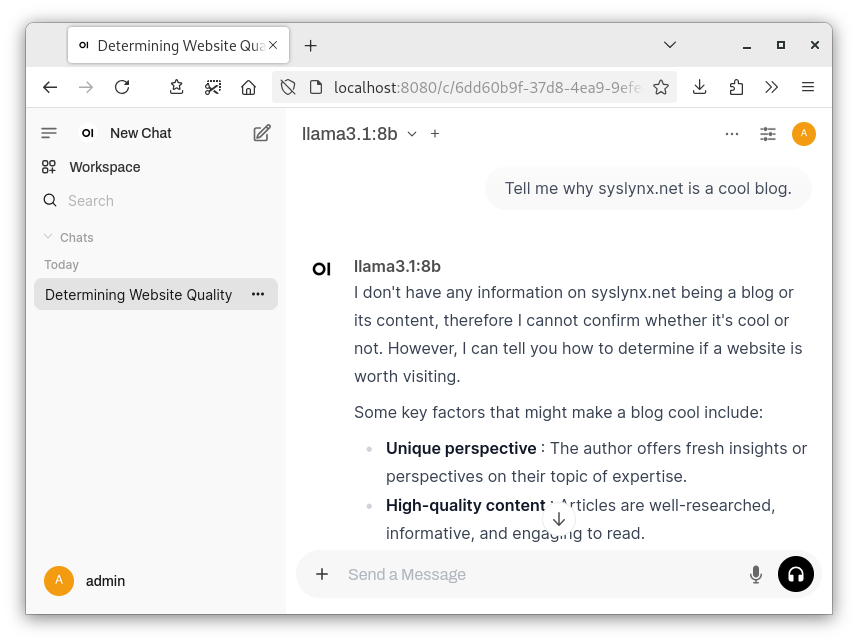

Optimized Local AI on Intel Arc B580 with Open WebUI and Ollama, using Fedora Linux and Podman

In this guide, I will show you how to use Open WebUI together with Ollama to run inference and fine-tuning on an Intel Arc B580 (or older) GPU. We will use Intel's IPEX-LLM PyTorch library for optimal performance.